Once you have set your mind on something, even if ten parts of effort cannot get it done, put in twelve. (Fenghuo Xi Zhuhou, Jian Lai 《剑来》)

Preface

These are notes I put together while learning k8s.

This post covers:

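- A brief overview of the K8s networking model
- How Calico is implemented in K8s
- Demo: cross-host container communication with Calico in K8s
- Demo: egress and ingress network policies in K8s
- Demo: network policy rules using ipBlock, namespaceSelector, and podSelector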


Kubernetes Networking: How Calico Is Implemented in K8s

Calico's implementation

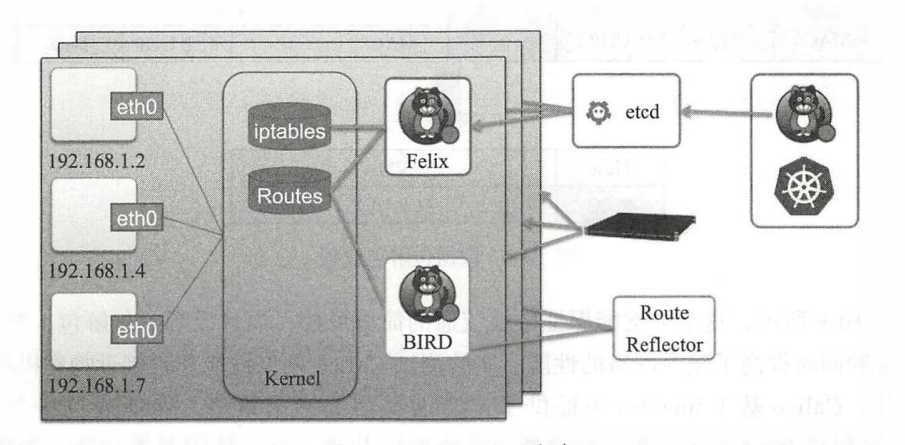

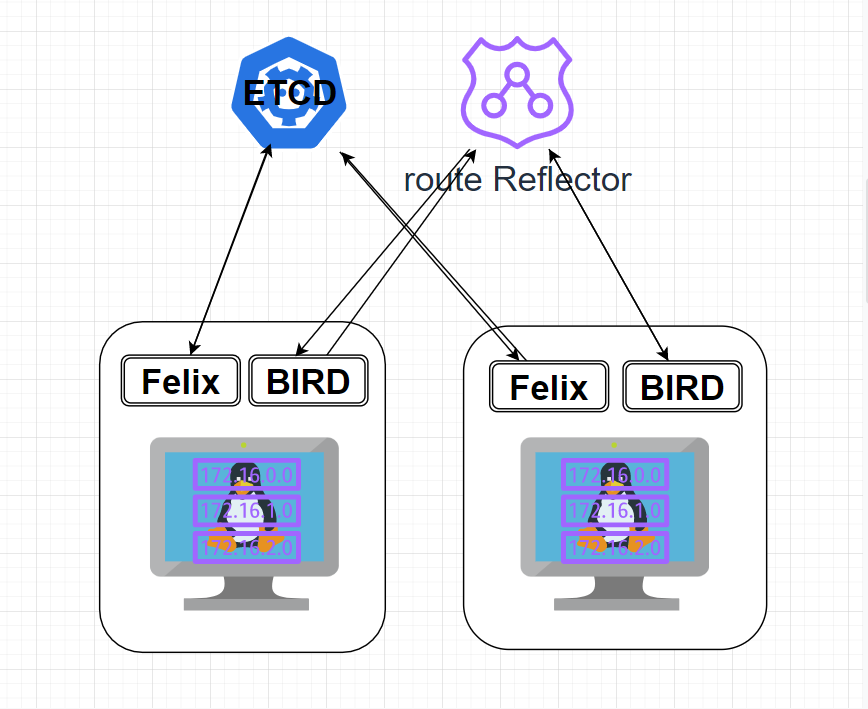

Calico's core components are Felix, etcd, the BGP client (BIRD), and the BGP Route Reflector.

Felix: the Calico agent. It "runs" on each Kubernetes Node and is mainly responsible for configuring routes, ACLs, and similar information to keep Endpoints connected.

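etcd: a distributed key-value store responsible for keeping network metadata consistent, which ensures that the Calico network state is accurate. It can be shared with Kubernetes.

BGP Client (BIRD): distributes the routes that Felix writes into the kernel across the Calico network, ensuring that workloads can actually reach each other.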

BGP Route Reflector: instead of the full-mesh mode in which every node peers with every other node, one or more BGP route reflectors distribute routes centrally.

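Calico scales the principles of the Internet's scalable IP networking down to the data-center level. On every compute node it uses the Linux kernel to implement an efficient vRouter that forwards traffic, and each vRouter uses BGP to propagate the routes of the containers running on it to the rest of the Calico network. Small deployments can peer directly with each other, while large deployments can use designated BGP route reflectors. As a result, all traffic between containers is carried as plain IP packets.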
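Why route reflectors matter at scale comes down to counting BGP sessions. Below is a minimal Python sketch, assuming a reflector layout in which the reflectors are ordinary nodes that form a full mesh among themselves while every other node peers only with each reflector; the function names are hypothetical and real topologies can differ.

```python
def full_mesh_sessions(n: int) -> int:
    """Full mesh: every node peers with every other node, n*(n-1)/2 sessions."""
    return n * (n - 1) // 2


def route_reflector_sessions(n: int, rr: int) -> int:
    """Assumed layout (hypothetical): rr of the n nodes act as route reflectors,
    the reflectors mesh with each other, and every other node peers with each reflector."""
    if not 1 <= rr <= n:
        raise ValueError("need 1 <= rr <= n")
    return full_mesh_sessions(rr) + (n - rr) * rr


for n in (3, 50, 500):
    print(f"{n:>3} nodes: full mesh = {full_mesh_sessions(n):>6}, "
          f"2 reflectors = {route_reflector_sessions(n, 2):>4}")
```

For the three hosts in the demo later in this post, the full mesh needs 3 sessions, which matches the two peers each node lists in `calicoctl node status`; at 500 nodes it is 124,750 sessions against 997 with two reflectors.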

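Communication happens purely at Layer 3 with no encapsulation or encryption at Layer 2, so Calico should only be used on private, trusted networks.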

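Traffic isolation is implemented with iptables, and the isolation rules to be generated are read from etcd, which brings some potential performance costs.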

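Every host runs Calico-Node as a virtual router, and Calico can organize the hosts into a cluster of any topology. When containers in the cluster need to talk to the outside world, the physical gateway router can be added to the cluster via BGP, so that external clients can reach container IPs directly without NAT or similar complex operations.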

Overall K8s flow diagram

Cross-host Docker networking

The common approaches to cross-host communication are:

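| Approach | Description |
| --- | --- |
| Host mode | Containers use the host's network directly, so cross-host communication works out of the box. Although this solves the cross-host problem, its use cases are limited: port conflicts happen easily, the network environment cannot be isolated, and a crashing container may well bring down the whole host. |
| Port binding | Container ports are bound to host ports, and services in containers are reached across hosts as "host IP + port". **This only supports applications at layer 4 and above of the network stack; containers are tightly coupled to the host, problems are hard to handle flexibly, and scalability is poor.** |
| Custom container network | Third-party SDN tools such as Open vSwitch or Flannel build a network that lets containers communicate across hosts. These solutions usually require the docker0 bridge on each host to use a different CIDR to avoid IP conflicts, which limits the range of IPs containers can obtain on a host. Exposing services outside the cluster also needs fairly complex configuration and demands solid networking skills from whoever deploys it. |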

Container networking has since settled into two camps:

- Docker's CNM;
- CNI, led by Google, CoreOS, and Kubernetes.

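CNM and CNI are network specifications (frameworks), not network implementations, so they do not care how the container network itself is implemented (Flannel, Calico, and so on); they are only concerned with network management.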

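| Network model | Description |
| --- | --- |
| CNM (Container Network Model) | CNM's strength is that it is native: the container network is tightly tied to the Docker container lifecycle. Its weakness is that it is "locked in" to Docker. Container network implementations that support CNM include Docker Swarm overlay, Macvlan & IP network drivers, Calico, Contiv, and Weave. |
| CNI (Container Network Interface) | CNI's strengths are compatibility with other container technologies (such as rkt) and with higher-level orchestration systems (Kubernetes & Mesos), plus a very active, fast-growing community. Its weakness is that it is not Docker-native. Container network implementations that support CNI include Kubernetes, Weave, Macvlan, Calico, Flannel, Contiv, and Mesos CNI. |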

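From the implementation point of view, however, they fall into these groups:

| Implementation | Description |
| --- | --- |
| Tunnel (overlay) | Tunnels are also widely used in IaaS-layer networks. Their main drawbacks are that complexity grows with the number of nodes and that network problems are hard to trace, which needs careful consideration in large clusters. |
| Routing | Usually implements network isolation and cross-host container connectivity at layer 3 or layer 2, and problems are easy to troubleshoot. Calico: a BGP-based routing solution that supports fine-grained ACL control and fits hybrid clouds well. Macvlan: from the logical and kernel point of view, the solution with the best isolation and performance. Because it isolates at layer 2, it needs support from the underlying layer 2 network; most cloud providers do not offer this, so it is hard to use in hybrid clouds. |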

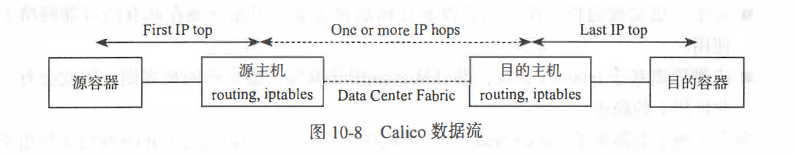

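Calico communication process

Calico treats each operating system's protocol stack as a router and runs the standard routing protocol, BGP, between these routers.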

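Calico is in fact a pure Layer 3 solution: it relies on Layer 3 of each machine's protocol stack to provide Layer 3 connectivity between containers, including containers on different hosts. Its network model is shown in the figure.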

Network model

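On the control plane, every Calico node runs two main programs:

| Program | Description |
| --- | --- |
| Felix | It watches etcd and receives events from it, such as a container or an IP address being added on this node. When a container is created on the node and its NIC, IP, and MAC have been configured, Felix writes an entry into the kernel routing table stating that this IP is reached through that NIC. |
| A standard routing program (the BGP client, BIRD) | It learns from the kernel which IP routes have changed and then spreads them to all other hosts with the standard BGP routing protocol, telling them that this IP lives here. |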
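A toy Python model of how the two programs cooperate may make the split clearer. It is not Calico code: the class, methods, and variables are hypothetical, the addresses and /26 blocks are taken from the demo later in this post, real Felix also programs iptables, and real BIRD exchanges routes over BGP sessions rather than by a method call.

```python
import ipaddress
from dataclasses import dataclass, field


@dataclass
class Node:
    host_ip: str   # address of the host NIC (ens32 in the demo)
    block: str     # /26 address block this node hands out to its containers
    routes: list = field(default_factory=list)

    def felix_add_workload(self, workload_ip: str, veth: str) -> None:
        """Felix's role: a local container got an IP, so write a /32 route to its veth."""
        self.routes.append((ipaddress.ip_network(f"{workload_ip}/32"),
                            f"dev {veth} scope link"))

    def bird_learn(self, peer: "Node") -> None:
        """BIRD's role: a BGP peer announced its block, so route it via the peer's host IP."""
        self.routes.append((ipaddress.ip_network(peer.block),
                            f"via {peer.host_ip} dev ens32 proto bird"))


def converge(nodes: list) -> None:
    """Full-mesh BGP: every node learns the block of every other node."""
    for node in nodes:
        for peer in nodes:
            if peer is not node:
                node.bird_learn(peer)


nodes = [
    Node("192.168.26.100", "192.168.239.128/26"),
    Node("192.168.26.101", "192.168.198.0/26"),
    Node("192.168.26.102", "192.168.63.64/26"),
]
nodes[0].felix_add_workload("192.168.239.128", "cali0b7f49da20a")
nodes[2].felix_add_workload("192.168.63.64", "cali6f956c2ada9")
converge(nodes)

for net, how in nodes[0].routes:   # routing entries of host 192.168.26.100
    print(net, how)
```

The printed entries have the same shape as the `ip route` output of 192.168.26.100 in the demo: one local /32 route to a cali* interface plus one `proto bird` route per remote block.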

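Because Calico is a pure Layer 3 (network layer) implementation, it avoids the packet encapsulation that Layer 2 solutions need. There is no NAT and no overlay in the path, so its forwarding efficiency is probably the highest of all the solutions. Packets travel straight through the native TCP/IP stack, which also makes isolation straightforward: the TCP/IP stack comes with a complete set of firewall rules, so fairly complex isolation logic can be expressed as iptables rules.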
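The cost of encapsulation shows up directly in the MTU left for workloads. A small Python calculation, assuming IPv4 headers without options and standard header sizes (IP-in-IP adds one 20-byte outer IPv4 header; VXLAN adds outer IPv4 + UDP + VXLAN + inner Ethernet); the mode names are labels chosen for this sketch:

```python
# Per-packet header overhead in bytes, assuming IPv4 without options.
IPV4_HEADER = 20
UDP_HEADER = 8
VXLAN_HEADER = 8
INNER_ETHERNET = 14

OVERHEAD = {
    "calico-bgp": 0,                                                    # plain routing, no encapsulation
    "ipip": IPV4_HEADER,                                                # one extra outer IPv4 header
    "vxlan": IPV4_HEADER + UDP_HEADER + VXLAN_HEADER + INNER_ETHERNET,  # 50 bytes in total
}


def workload_mtu(link_mtu: int, mode: str) -> int:
    """Largest inner IP packet that fits on the physical link without fragmentation."""
    return link_mtu - OVERHEAD[mode]


for mode in OVERHEAD:
    print(f"{mode:<10} {workload_mtu(1500, mode)}")
```

On a 1500-byte link this gives 1500, 1480, and 1450. The 1500 matches cali0's MTU in the Docker demo below, and the 1480 on tunl0 and the cali* interfaces in the K8s cluster output near the end of the demo suggests that cluster runs Calico in IPIP mode.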

Calico implementation

Topology

Environment preparation

Here we use Calico to walk through cross-host container communication. First, test Ansible connectivity to the hosts:

```
┌──[root@vms81.liruilongs.github.io]-[~/ansible]
└─$ansible etcd -m ping
192.168.26.101 | SUCCESS => {
    "ansible_facts": {
        "discovered_interpreter_python": "/usr/bin/python"
    },
    "changed": false,
    "ping": "pong"
}
192.168.26.102 | SUCCESS => {
    "ansible_facts": {
        "discovered_interpreter_python": "/usr/bin/python"
    },
    "changed": false,
    "ping": "pong"
}
192.168.26.100 | SUCCESS => {
    "ansible_facts": {
        "discovered_interpreter_python": "/usr/bin/python"
    },
    "changed": false,
    "ping": "pong"
}
┌──[root@vms81.liruilongs.github.io]-[~/ansible]
└─$
```

Test the etcd cluster. An etcd cluster has already been set up here; etcdctl member list shows its members:

```
┌──[root@vms81.liruilongs.github.io]-[~/ansible]
└─$ansible etcd -m shell -a "etcdctl member list"
192.168.26.102 | CHANGED | rc=0 >>
6f2038a018db1103, started, etcd-100, http://192.168.26.100:2380, http://192.168.26.100:2379,http://localhost:2379
bd330576bb637f25, started, etcd-101, http://192.168.26.101:2380, http://192.168.26.101:2379,http://localhost:2379
fbd8a96cbf1c004d, started, etcd-102, http://192.168.26.102:2380, http://192.168.26.100:2379,http://localhost:2379
192.168.26.101 | CHANGED | rc=0 >>
6f2038a018db1103, started, etcd-100, http://192.168.26.100:2380, http://192.168.26.100:2379,http://localhost:2379
bd330576bb637f25, started, etcd-101, http://192.168.26.101:2380, http://192.168.26.101:2379,http://localhost:2379
fbd8a96cbf1c004d, started, etcd-102, http://192.168.26.102:2380, http://192.168.26.100:2379,http://localhost:2379
192.168.26.100 | CHANGED | rc=0 >>
6f2038a018db1103, started, etcd-100, http://192.168.26.100:2380, http://192.168.26.100:2379,http://localhost:2379
bd330576bb637f25, started, etcd-101, http://192.168.26.101:2380, http://192.168.26.101:2379,http://localhost:2379
fbd8a96cbf1c004d, started, etcd-102, http://192.168.26.102:2380, http://192.168.26.100:2379,http://localhost:2379
┌──[root@vms81.liruilongs.github.io]-[~/ansible]
└─$
```

Install and start Docker, then point it at a cluster store:

```
┌──[root@vms81.liruilongs.github.io]-[~/ansible]
└─$ansible etcd -m shell -a "yum -y install docker-ce"
┌──[root@vms81.liruilongs.github.io]-[~/ansible]
└─$ansible etcd -m shell -a "systemctl enable docker --now"
┌──[root@vms81.liruilongs.github.io]-[~/ansible]
└─$ansible etcd -m shell -a "systemctl status docker"
192.168.26.100 | CHANGED | rc=0 >>
● docker.service - Docker Application Container Engine
   Loaded: loaded (/usr/lib/systemd/system/docker.service; enabled; vendor preset: disabled)
   Active: active (running) since Sat 2022-01-01 20:27:17 CST; 10min ago
     Docs: https://docs.docker.com
...
```

Modify the Docker startup parameters to set the cluster store with --cluster-store=. The current ExecStart line is:

```
┌──[root@vms81.liruilongs.github.io]-[~/ansible]
└─$ansible etcd -m shell -a "cat /usr/lib/systemd/system/docker.service | grep containerd.sock"
192.168.26.100 | CHANGED | rc=0 >>
ExecStart=/usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock
192.168.26.102 | CHANGED | rc=0 >>
ExecStart=/usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock
192.168.26.101 | CHANGED | rc=0 >>
ExecStart=/usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock
```

Here we edit it directly with sed, pointing each host at its local etcd member:

```
┌──[root@vms81.liruilongs.github.io]-[~/ansible]
└─$ansible 192.168.26.100 -m shell -a "sed -i 's#containerd\.sock#containerd.sock --cluster-store=etcd://192.168.26.100:2379#' /usr/lib/systemd/system/docker.service "
192.168.26.100 | CHANGED | rc=0 >>

┌──[root@vms81.liruilongs.github.io]-[~/ansible]
└─$ansible 192.168.26.101 -m shell -a "sed -i 's#containerd\.sock#containerd.sock --cluster-store=etcd://192.168.26.101:2379#' /usr/lib/systemd/system/docker.service "
192.168.26.101 | CHANGED | rc=0 >>

┌──[root@vms81.liruilongs.github.io]-[~/ansible]
└─$ansible 192.168.26.102 -m shell -a "sed -i 's#containerd\.sock#containerd.sock --cluster-store=etcd://192.168.26.102:2379#' /usr/lib/systemd/system/docker.service "
192.168.26.102 | CHANGED | rc=0 >>

┌──[root@vms81.liruilongs.github.io]-[~/ansible]
└─$
```
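To see exactly what the sed expression does to the ExecStart line, here is the same substitution in Python. This is only an illustration for checking the result offline; the function name add_cluster_store is hypothetical.

```python
import re

EXEC_START = ("ExecStart=/usr/bin/dockerd -H fd:// "
              "--containerd=/run/containerd/containerd.sock")


def add_cluster_store(line: str, etcd_ip: str) -> str:
    # Mirrors: sed 's#containerd\.sock#containerd.sock --cluster-store=etcd://IP:2379#'
    # sed without the g flag replaces only the first match on a line, hence count=1.
    return re.sub(r"containerd\.sock",
                  f"containerd.sock --cluster-store=etcd://{etcd_ip}:2379",
                  line, count=1)


print(add_cluster_store(EXEC_START, "192.168.26.100"))
```

One practical consequence: the edit is not idempotent. Running the sed a second time matches containerd.sock again and appends a second --cluster-store flag, so check the unit file before re-running the playbook.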

Reload the unit files and restart Docker:

```
┌──[root@vms81.liruilongs.github.io]-[~/ansible]
└─$ansible etcd -m shell -a "systemctl daemon-reload; systemctl restart docker"
192.168.26.100 | CHANGED | rc=0 >>

192.168.26.102 | CHANGED | rc=0 >>

192.168.26.101 | CHANGED | rc=0 >>

┌──[root@vms81.liruilongs.github.io]-[~/ansible]
└─$ansible etcd -m shell -a "systemctl status docker"
```

Next, create the Calico configuration file. First use Ansible's file module to create the directory /etc/calico (the equivalent of mkdir /etc/calico):

```
┌──[root@vms81.liruilongs.github.io]-[~/ansible]
└─$ansible etcd -m file -a "path=/etc/calico/ state=directory force=yes"
```

Then use the template module to generate the configuration file. The template looks like this:

```
┌──[root@vms81.liruilongs.github.io]-[~/ansible]
└─$cat calicoctl.j2
apiVersion: v1
kind: calicoApiConfig
metadata:
spec:
  datastoreType: "etcdv2"
  etcdEndpoints: "http://{{inventory_hostname}}:2379"
┌──[root@vms81.liruilongs.github.io]-[~/ansible]
└─$
```

Render the configuration file on every host of the Calico cluster:

```
┌──[root@vms81.liruilongs.github.io]-[~/ansible]
└─$ansible etcd -m template -a "src=calicoctl.j2 dest=/etc/calico/calicoctl.cfg force=yes"
```

Verify the generated configuration files:

```
┌──[root@vms81.liruilongs.github.io]-[~/ansible]
└─$ansible etcd -m shell -a "cat /etc/calico/calicoctl.cfg"
192.168.26.100 | CHANGED | rc=0 >>
apiVersion: v1
kind: calicoApiConfig
metadata:
spec:
  datastoreType: "etcdv2"
  etcdEndpoints: "http://192.168.26.100:2379"
192.168.26.102 | CHANGED | rc=0 >>
apiVersion: v1
kind: calicoApiConfig
metadata:
spec:
  datastoreType: "etcdv2"
  etcdEndpoints: "http://192.168.26.102:2379"
192.168.26.101 | CHANGED | rc=0 >>
apiVersion: v1
kind: calicoApiConfig
metadata:
spec:
  datastoreType: "etcdv2"
  etcdEndpoints: "http://192.168.26.101:2379"
```

Import the images needed for the lab:

```
┌──[root@vms81.liruilongs.github.io]-[~/ansible]
└─$ansible etcd -m copy -a "src=/root/calico-node-v2.tar dest=/root/"
┌──[root@vms81.liruilongs.github.io]-[~/ansible]
└─$ansible etcd -m shell -a "docker load -i /root/calico-node-v2.tar"
192.168.26.100 | CHANGED | rc=0 >>
Loaded image: quay.io/calico/node:v2.6.12
192.168.26.102 | CHANGED | rc=0 >>
Loaded image: quay.io/calico/node:v2.6.12
192.168.26.101 | CHANGED | rc=0 >>
Loaded image: quay.io/calico/node:v2.6.12
┌──[root@vms81.liruilongs.github.io]-[~/ansible]
└─$
```

Check the images:

```
┌──[root@vms81.liruilongs.github.io]-[~/ansible]
└─$ansible etcd -m shell -a "docker images"
192.168.26.102 | CHANGED | rc=0 >>
REPOSITORY            TAG       IMAGE ID       CREATED       SIZE
quay.io/calico/node   v2.6.12   401cc3e56a1a   3 years ago   281MB
192.168.26.100 | CHANGED | rc=0 >>
REPOSITORY            TAG       IMAGE ID       CREATED       SIZE
quay.io/calico/node   v2.6.12   401cc3e56a1a   3 years ago   281MB
192.168.26.101 | CHANGED | rc=0 >>
REPOSITORY            TAG       IMAGE ID       CREATED       SIZE
quay.io/calico/node   v2.6.12   401cc3e56a1a   3 years ago   281MB
┌──[root@vms81.liruilongs.github.io]-[~/ansible]
└─$
```

Copy the calicoctl tool to every host:

```
┌──[root@vms81.liruilongs.github.io]-[~/ansible]
└─$ansible etcd -m copy -a "src=/root/calicoctl dest=/bin/ mode=+x"
```

Building the Calico network

Start calico/node on every host, so that each host runs calico/node as its virtual router:

```
┌──[root@vms81.liruilongs.github.io]-[~/ansible]
└─$ansible etcd -m shell -a "calicoctl node run --node-image=quay.io/calico/node:v2.6.12 -c /etc/calico/calicoctl.cfg"
```

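Check the node status. Calico can organize the hosts into a cluster of any topology; here the three hosts form a node-to-node BGP mesh: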

```
┌──[root@vms81.liruilongs.github.io]-[~/ansible]
└─$ansible etcd -m shell -a "calicoctl node status"
192.168.26.102 | CHANGED | rc=0 >>
Calico process is running.

IPv4 BGP status
+----------------+-------------------+-------+----------+-------------+
|  PEER ADDRESS  |     PEER TYPE     | STATE |  SINCE   |    INFO     |
+----------------+-------------------+-------+----------+-------------+
| 192.168.26.100 | node-to-node mesh | up    | 14:46:35 | Established |
| 192.168.26.101 | node-to-node mesh | up    | 14:46:34 | Established |
+----------------+-------------------+-------+----------+-------------+

IPv6 BGP status
No IPv6 peers found.
192.168.26.101 | CHANGED | rc=0 >>
Calico process is running.

IPv4 BGP status
+----------------+-------------------+-------+----------+-------------+
|  PEER ADDRESS  |     PEER TYPE     | STATE |  SINCE   |    INFO     |
+----------------+-------------------+-------+----------+-------------+
| 192.168.26.100 | node-to-node mesh | up    | 14:46:31 | Established |
| 192.168.26.102 | node-to-node mesh | up    | 14:46:34 | Established |
+----------------+-------------------+-------+----------+-------------+

IPv6 BGP status
No IPv6 peers found.
192.168.26.100 | CHANGED | rc=0 >>
Calico process is running.

IPv4 BGP status
+----------------+-------------------+-------+----------+-------------+
|  PEER ADDRESS  |     PEER TYPE     | STATE |  SINCE   |    INFO     |
+----------------+-------------------+-------+----------+-------------+
| 192.168.26.101 | node-to-node mesh | up    | 14:46:31 | Established |
| 192.168.26.102 | node-to-node mesh | up    | 14:46:35 | Established |
+----------------+-------------------+-------+----------+-------------+

IPv6 BGP status
No IPv6 peers found.
┌──[root@vms81.liruilongs.github.io]-[~/ansible]
└─$
```


Cross-host communication over the Calico network

On one of the nodes, create a Docker network that uses the calico driver:

```
┌──[root@vms81.liruilongs.github.io]-[~/ansible]
└─$ansible 192.168.26.100 -m shell -a "docker network create --driver calico --ipam-driver calico-ipam calnet1"
192.168.26.100 | CHANGED | rc=0 >>
58121f89bcddec441770aa207ef662d09e4413625b0827ce4d8f601fb10650d0
```

This network turns out to be global: it is visible on every node under the same ID, 58121f89bcdd:

```
┌──[root@vms81.liruilongs.github.io]-[~/ansible]
└─$ansible etcd -m shell -a "docker network list"
192.168.26.100 | CHANGED | rc=0 >>
NETWORK ID     NAME      DRIVER    SCOPE
caa87ba3dd86   bridge    bridge    local
58121f89bcdd   calnet1   calico    global
1d63e3ad385f   host      host      local
adc94f172d5f   none      null      local
192.168.26.102 | CHANGED | rc=0 >>
NETWORK ID     NAME      DRIVER    SCOPE
cc37d3c66e2f   bridge    bridge    local
58121f89bcdd   calnet1   calico    global
3b138015d4ab   host      host      local
7481614a7084   none      null      local
192.168.26.101 | CHANGED | rc=0 >>
NETWORK ID     NAME      DRIVER    SCOPE
d0cb224ed111   bridge    bridge    local
58121f89bcdd   calnet1   calico    global
106e1c9fb3d3   host      host      local
f983021e2a02   none      null      local
┌──[root@vms81.liruilongs.github.io]-[~/ansible]
└─$
```

Check the interfaces on each node. No containers are running yet, so there are no cali* interfaces:

```
┌──[root@vms81.liruilongs.github.io]-[~/ansible]
└─$ansible etcd -m shell -a "ip a"
192.168.26.102 | CHANGED | rc=0 >>
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN qlen 1
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: ens32: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000
    link/ether 00:0c:29:0f:98:f1 brd ff:ff:ff:ff:ff:ff
    inet 192.168.26.102/24 brd 192.168.26.255 scope global ens32
       valid_lft forever preferred_lft forever
    inet6 fe80::20c:29ff:fe0f:98f1/64 scope link
       valid_lft forever preferred_lft forever
3: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN
    link/ether 02:42:c3:28:19:78 brd ff:ff:ff:ff:ff:ff
    inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0
       valid_lft forever preferred_lft forever
192.168.26.100 | CHANGED | rc=0 >>
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN qlen 1
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: ens32: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000
    link/ether 00:0c:29:8c:e8:1a brd ff:ff:ff:ff:ff:ff
    inet 192.168.26.100/24 brd 192.168.26.255 scope global ens32
       valid_lft forever preferred_lft forever
    inet6 fe80::20c:29ff:fe8c:e81a/64 scope link
       valid_lft forever preferred_lft forever
3: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN
    link/ether 02:42:f7:1a:2e:30 brd ff:ff:ff:ff:ff:ff
    inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0
       valid_lft forever preferred_lft forever
192.168.26.101 | CHANGED | rc=0 >>
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN qlen 1
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: ens32: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000
    link/ether 00:0c:29:3b:6e:ef brd ff:ff:ff:ff:ff:ff
    inet 192.168.26.101/24 brd 192.168.26.255 scope global ens32
       valid_lft forever preferred_lft forever
    inet6 fe80::20c:29ff:fe3b:6eef/64 scope link
       valid_lft forever preferred_lft forever
3: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN
    link/ether 02:42:70:a7:4e:7e brd ff:ff:ff:ff:ff:ff
    inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0
       valid_lft forever preferred_lft forever
┌──[root@vms81.liruilongs.github.io]-[~/ansible]
└─$
```

Run one container on each node:

```
┌──[root@vms81.liruilongs.github.io]-[~/ansible]
└─$ansible etcd -m shell -a "docker run --name {{inventory_hostname}} -itd --net=calnet1 --restart=always busybox "
192.168.26.101 | CHANGED | rc=0 >>
cf2ff4b65e6343fa6e9afba6e75376b97ac47ea59c35f3c492bb7051c15627f0
192.168.26.100 | CHANGED | rc=0 >>
065724c073ded04d6df41d295be3cd5585f8683664fd42a3953dc8067195c58e
192.168.26.102 | CHANGED | rc=0 >>
82e4d6dfde5a6e51f9a4d4f86909678a42e8d1e2d9bfa6edd9cc258b37dfc2db
```

Check the containers on each node:

```
┌──[root@vms81.liruilongs.github.io]-[~/ansible]
└─$ansible etcd -m shell -a "docker ps"
192.168.26.102 | CHANGED | rc=0 >>
CONTAINER ID   IMAGE                         COMMAND         CREATED              STATUS              PORTS     NAMES
82e4d6dfde5a   busybox                       "sh"            About a minute ago   Up About a minute             192.168.26.102
c2d2ab904d6d   quay.io/calico/node:v2.6.12   "start_runit"   2 hours ago          Up 2 hours                    calico-node
192.168.26.100 | CHANGED | rc=0 >>
CONTAINER ID   IMAGE                         COMMAND         CREATED              STATUS              PORTS     NAMES
065724c073de   busybox                       "sh"            About a minute ago   Up About a minute             192.168.26.100
f0b150a924d9   quay.io/calico/node:v2.6.12   "start_runit"   2 hours ago          Up 2 hours                    calico-node
192.168.26.101 | CHANGED | rc=0 >>
CONTAINER ID   IMAGE                         COMMAND         CREATED              STATUS              PORTS     NAMES
cf2ff4b65e63   busybox                       "sh"            About a minute ago   Up About a minute             192.168.26.101
0e4e6f005797   quay.io/calico/node:v2.6.12   "start_runit"   2 hours ago          Up 2 hours                    calico-node
┌──[root@vms81.liruilongs.github.io]-[~/ansible]
└─$
```

Check the interface and IP inside each container:

```
┌──[root@vms81.liruilongs.github.io]-[~/ansible]
└─$ansible etcd -m shell -a "docker exec -it {{inventory_hostname}} ip a | grep cali0 -A 4"
192.168.26.100 | CHANGED | rc=0 >>
4: cali0@if5: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue
    link/ether ee:ee:ee:ee:ee:ee brd ff:ff:ff:ff:ff:ff
    inet 192.168.239.128/32 scope global cali0
       valid_lft forever preferred_lft forever
192.168.26.102 | CHANGED | rc=0 >>
4: cali0@if5: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue
    link/ether ee:ee:ee:ee:ee:ee brd ff:ff:ff:ff:ff:ff
    inet 192.168.63.64/32 scope global cali0
       valid_lft forever preferred_lft forever
192.168.26.101 | CHANGED | rc=0 >>
4: cali0@if5: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue
    link/ether ee:ee:ee:ee:ee:ee brd ff:ff:ff:ff:ff:ff
    inet 192.168.198.0/32 scope global cali0
       valid_lft forever preferred_lft forever
┌──[root@vms81.liruilongs.github.io]-[~/ansible]
└─$
```
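The three /32 container addresses each fall into a /26 block, and those blocks are exactly the `proto bird` routes that appear in the host routing tables further down. A short Python check with the standard ipaddress module; the /26 size is inferred from those routes (it is also Calico's usual default block size, but treat that as an assumption for other setups), and the names CONTAINER_IPS and ipam_block are hypothetical:

```python
import ipaddress

# cali0 addresses observed above, keyed by host
CONTAINER_IPS = {
    "192.168.26.100": "192.168.239.128",
    "192.168.26.101": "192.168.198.0",
    "192.168.26.102": "192.168.63.64",
}


def ipam_block(ip: str, prefix: int = 26) -> ipaddress.IPv4Network:
    """Block that contains ip; strict=False clears the host bits."""
    return ipaddress.ip_network(f"{ip}/{prefix}", strict=False)


for host, ip in CONTAINER_IPS.items():
    block = ipam_block(ip)
    print(f"{host}: container {ip} -> block {block} "
          f"({block.num_addresses} addresses, {block[0]} - {block[-1]})")
```

Because a whole block belongs to one host, the other hosts need only one route per block instead of one route per container.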

Check the routes inside the containers: all outbound traffic leaves through the cali0 interface:

```
┌──[root@vms81.liruilongs.github.io]-[~/ansible]
└─$ansible etcd -m shell -a "docker exec -it {{inventory_hostname}} ip route | grep cali0 "
192.168.26.101 | CHANGED | rc=0 >>
default via 169.254.1.1 dev cali0
169.254.1.1 dev cali0 scope link
192.168.26.102 | CHANGED | rc=0 >>
default via 169.254.1.1 dev cali0
169.254.1.1 dev cali0 scope link
192.168.26.100 | CHANGED | rc=0 >>
default via 169.254.1.1 dev cali0
169.254.1.1 dev cali0 scope link
```

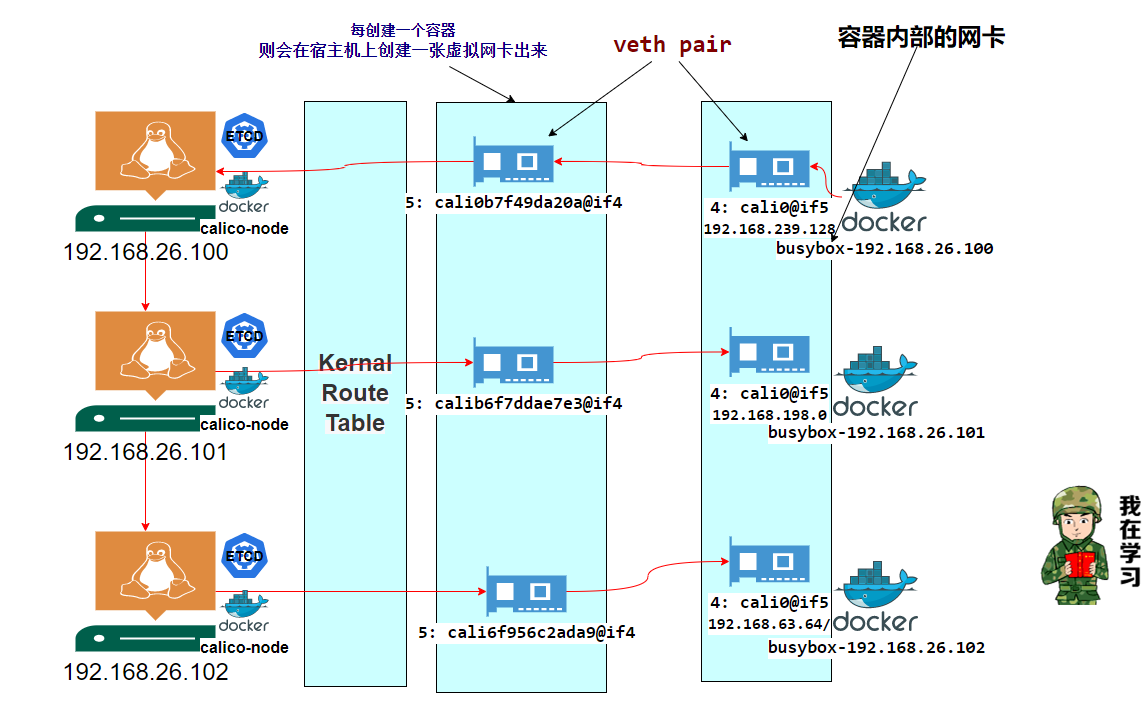

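For every container created, a virtual NIC corresponding to the container's NIC is created on the host. Here we can see that the virtual NIC cali0 inside the container and cali6f956c2ada9 on the host (192.168.26.102) form a veth pair.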

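If veth pairs are new to you, they are easy to look up; briefly, their job is to carry packets sent from one network namespace into another. veth devices always come in pairs: one end lives inside the container and the other outside it, on the host, where you can see it on the real machine. Whatever is sent into one end comes out of the other end as received data. Once a pair is created and configured, data written into one end is turned around and injected into the kernel networking subsystem, and can be read from the other end. (Across namespaces, anything received (RX) on either end of a veth device is transmitted (TX) out of the other end.) veth works at Layer 2 (the data link layer), and a veth pair does not modify packet contents while forwarding them.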

For more details, see: https://blog.csdn.net/sld880311/article/details/77650937

```
┌──[root@vms81.liruilongs.github.io]-[~/ansible]
└─$ansible etcd -m shell -a "ip a | grep -A 4 cali"
192.168.26.102 | CHANGED | rc=0 >>
5: cali6f956c2ada9@if4: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP
    link/ether 6a:65:54:1a:19:e6 brd ff:ff:ff:ff:ff:ff link-netnsid 0
    inet6 fe80::6865:54ff:fe1a:19e6/64 scope link
       valid_lft forever preferred_lft forever
192.168.26.100 | CHANGED | rc=0 >>
5: cali0b7f49da20a@if4: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP
    link/ether 9e:da:0e:cc:b3:7e brd ff:ff:ff:ff:ff:ff link-netnsid 0
    inet6 fe80::9cda:eff:fecc:b37e/64 scope link
       valid_lft forever preferred_lft forever
192.168.26.101 | CHANGED | rc=0 >>
5: calib6f7ddae7e3@if4: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UP
    link/ether 1e:e6:16:ae:f0:91 brd ff:ff:ff:ff:ff:ff link-netnsid 0
    inet6 fe80::1ce6:16ff:feae:f091/64 scope link
       valid_lft forever preferred_lft forever
┌──[root@vms81.liruilongs.github.io]-[~/ansible]
└─$
```

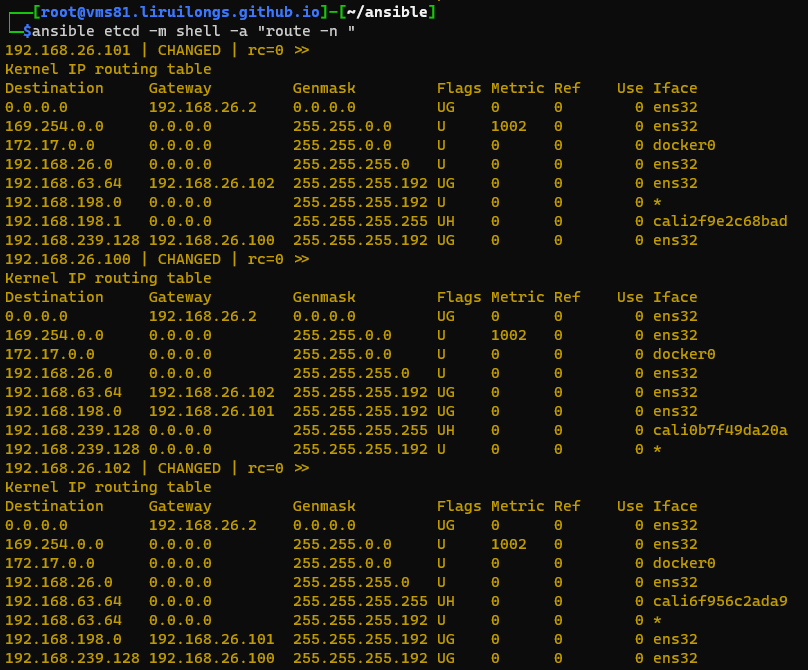

Check the routing tables of the hosts:

```
┌──[root@vms81.liruilongs.github.io]-[~/ansible]
└─$ansible etcd -m shell -a "ip route "
192.168.26.101 | CHANGED | rc=0 >>
default via 192.168.26.2 dev ens32
169.254.0.0/16 dev ens32 scope link metric 1002
172.17.0.0/16 dev docker0 proto kernel scope link src 172.17.0.1
192.168.26.0/24 dev ens32 proto kernel scope link src 192.168.26.101
192.168.63.64/26 via 192.168.26.102 dev ens32 proto bird
blackhole 192.168.198.0/26 proto bird
192.168.198.1 dev cali2f9e2c68bad scope link
192.168.239.128/26 via 192.168.26.100 dev ens32 proto bird
192.168.26.100 | CHANGED | rc=0 >>
default via 192.168.26.2 dev ens32
169.254.0.0/16 dev ens32 scope link metric 1002
172.17.0.0/16 dev docker0 proto kernel scope link src 172.17.0.1
192.168.26.0/24 dev ens32 proto kernel scope link src 192.168.26.100
192.168.63.64/26 via 192.168.26.102 dev ens32 proto bird
192.168.198.0/26 via 192.168.26.101 dev ens32 proto bird
192.168.239.128 dev cali0b7f49da20a scope link
blackhole 192.168.239.128/26 proto bird
192.168.26.102 | CHANGED | rc=0 >>
default via 192.168.26.2 dev ens32
169.254.0.0/16 dev ens32 scope link metric 1002
172.17.0.0/16 dev docker0 proto kernel scope link src 172.17.0.1
192.168.26.0/24 dev ens32 proto kernel scope link src 192.168.26.102
192.168.63.64 dev cali6f956c2ada9 scope link
blackhole 192.168.63.64/26 proto bird
192.168.198.0/26 via 192.168.26.101 dev ens32 proto bird
192.168.239.128/26 via 192.168.26.100 dev ens32 proto bird
┌──[root@vms81.liruilongs.github.io]-[~/ansible]
└─$
```

Take one of the hosts, 192.168.26.100, as an example:

192.168.239.128 dev cali0b7f49da20a scope link

Inbound: on this host, packets destined for the container IP 192.168.239.128 are sent through cali0b7f49da20a (the newly created virtual NIC).

192.168.63.64/26 via 192.168.26.102 dev ens32 proto bird

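Outbound: packets destined for the container subnets 192.168.63.64/26 and 192.168.198.0/26 leave through ens32 toward the other two hosts, 192.168.26.102 and 192.168.26.101 respectively.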
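The kernel picks among these routes by longest-prefix match, which is why the /32 route to the local veth wins over the /26 blackhole for the same address. A minimal Python sketch of that lookup over 192.168.26.100's table, transcribed from the ip route output above; it deliberately ignores metrics, policy routing, and multiple routing tables, and the names ROUTES and lookup are hypothetical:

```python
import ipaddress

# Routing table of host 192.168.26.100, transcribed from the ip route output above.
# A route printed without a prefix length (192.168.239.128 dev ...) is a /32 host route.
ROUTES = [
    ("0.0.0.0/0", "via 192.168.26.2 dev ens32"),
    ("169.254.0.0/16", "dev ens32"),
    ("172.17.0.0/16", "dev docker0"),
    ("192.168.26.0/24", "dev ens32"),
    ("192.168.63.64/26", "via 192.168.26.102 dev ens32"),
    ("192.168.198.0/26", "via 192.168.26.101 dev ens32"),
    ("192.168.239.128/32", "dev cali0b7f49da20a"),
    ("192.168.239.128/26", "blackhole"),
]


def lookup(dst: str) -> str:
    """Longest-prefix match: among all routes containing dst, the most specific wins."""
    addr = ipaddress.ip_address(dst)
    candidates = [(ipaddress.ip_network(net), action)
                  for net, action in ROUTES if addr in ipaddress.ip_network(net)]
    _, best_action = max(candidates, key=lambda c: c[0].prefixlen)
    return best_action


for dst in ("192.168.239.128", "192.168.63.64", "192.168.198.0",
            "192.168.239.130", "8.8.8.8"):
    print(f"{dst:<16} -> {lookup(dst)}")
```

The 192.168.239.130 case shows what the blackhole route is for: an address in the host's own block that no container owns is dropped locally instead of leaking out through the default route.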

Every host knows which host each container lives on, so the routes are set up dynamically. The same tables in route -n format:

```
┌──[root@vms81.liruilongs.github.io]-[~/ansible]
└─$ansible etcd -m shell -a "route -n "
192.168.26.101 | CHANGED | rc=0 >>
Kernel IP routing table
Destination     Gateway         Genmask         Flags Metric Ref    Use Iface
0.0.0.0         192.168.26.2    0.0.0.0         UG    0      0        0 ens32
169.254.0.0     0.0.0.0         255.255.0.0     U     1002   0        0 ens32
172.17.0.0      0.0.0.0         255.255.0.0     U     0      0        0 docker0
192.168.26.0    0.0.0.0         255.255.255.0   U     0      0        0 ens32
192.168.63.64   192.168.26.102  255.255.255.192 UG    0      0        0 ens32
192.168.198.0   0.0.0.0         255.255.255.192 U     0      0        0 *
192.168.198.1   0.0.0.0         255.255.255.255 UH    0      0        0 cali2f9e2c68bad
192.168.239.128 192.168.26.100  255.255.255.192 UG    0      0        0 ens32
192.168.26.100 | CHANGED | rc=0 >>
Kernel IP routing table
Destination     Gateway         Genmask         Flags Metric Ref    Use Iface
0.0.0.0         192.168.26.2    0.0.0.0         UG    0      0        0 ens32
169.254.0.0     0.0.0.0         255.255.0.0     U     1002   0        0 ens32
172.17.0.0      0.0.0.0         255.255.0.0     U     0      0        0 docker0
192.168.26.0    0.0.0.0         255.255.255.0   U     0      0        0 ens32
192.168.63.64   192.168.26.102  255.255.255.192 UG    0      0        0 ens32
192.168.198.0   192.168.26.101  255.255.255.192 UG    0      0        0 ens32
192.168.239.128 0.0.0.0         255.255.255.255 UH    0      0        0 cali0b7f49da20a
192.168.239.128 0.0.0.0         255.255.255.192 U     0      0        0 *
192.168.26.102 | CHANGED | rc=0 >>
Kernel IP routing table
Destination     Gateway         Genmask         Flags Metric Ref    Use Iface
0.0.0.0         192.168.26.2    0.0.0.0         UG    0      0        0 ens32
169.254.0.0     0.0.0.0         255.255.0.0     U     1002   0        0 ens32
172.17.0.0      0.0.0.0         255.255.0.0     U     0      0        0 docker0
192.168.26.0    0.0.0.0         255.255.255.0   U     0      0        0 ens32
192.168.63.64   0.0.0.0         255.255.255.255 UH    0      0        0 cali6f956c2ada9
192.168.63.64   0.0.0.0         255.255.255.192 U     0      0        0 *
192.168.198.0   192.168.26.101  255.255.255.192 UG    0      0        0 ens32
192.168.239.128 192.168.26.100  255.255.255.192 UG    0      0        0 ens32
┌──[root@vms81.liruilongs.github.io]-[~/ansible]
└─$
```
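route -n prints dotted Genmask values and single-letter flags where ip route prints prefix lengths. A small Python helper to translate between the two views; the flag meanings (U up, G gateway, H host) follow the route(8) man page, and the helper names are hypothetical:

```python
import ipaddress

# Meaning of the route(8) flags that appear in the output above
FLAGS = {"U": "route is up", "G": "uses a gateway", "H": "target is a single host"}


def prefix_len(genmask: str) -> int:
    """Convert a dotted Genmask (route -n) into a prefix length (ip route)."""
    return ipaddress.IPv4Network(f"0.0.0.0/{genmask}").prefixlen


def describe(flags: str) -> str:
    return ", ".join(FLAGS[f] for f in flags)


for mask, flags in (("255.255.255.192", "UG"), ("255.255.255.255", "UH"), ("0.0.0.0", "UG")):
    print(f"{mask:<16} /{prefix_len(mask):<3} {flags:<3} {describe(flags)}")
```

So a UG row with Genmask 255.255.255.192 is a /26 block reached via another host, and a UH row with 255.255.255.255 is the /32 route to a local container's veth.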

A quick test: the container on host 192.168.26.100 (192.168.239.128) pings 192.168.63.64 (the container on 192.168.26.102), confirming cross-host connectivity:

```
┌──[root@vms100.liruilongs.github.io]-[~]
└─$ docker exec -it 192.168.26.100 /bin/sh
/ # ls
bin   dev   etc   home  proc  root  sys   tmp   usr   var
/ # ip a
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue qlen 1
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
4: cali0@if5: <BROADCAST,MULTICAST,UP,LOWER_UP,M-DOWN> mtu 1500 qdisc noqueue
    link/ether ee:ee:ee:ee:ee:ee brd ff:ff:ff:ff:ff:ff
    inet 192.168.239.128/32 scope global cali0
       valid_lft forever preferred_lft forever
/ # ping 192.168.63.64
PING 192.168.63.64 (192.168.63.64): 56 data bytes
64 bytes from 192.168.63.64: seq=0 ttl=62 time=18.519 ms
64 bytes from 192.168.63.64: seq=1 ttl=62 time=0.950 ms
64 bytes from 192.168.63.64: seq=2 ttl=62 time=1.086 ms
64 bytes from 192.168.63.64: seq=3 ttl=62 time=0.846 ms
64 bytes from 192.168.63.64: seq=4 ttl=62 time=0.840 ms
64 bytes from 192.168.63.64: seq=5 ttl=62 time=1.151 ms
64 bytes from 192.168.63.64: seq=6 ttl=62 time=0.888 ms
^C
--- 192.168.63.64 ping statistics ---
7 packets transmitted, 7 packets received, 0% packet loss
round-trip min/avg/max = 0.840/3.468/18.519 ms
/ #
```
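The ttl=62 in the replies is consistent with the vRouter model. Assuming the replying busybox container starts at the usual Linux default TTL of 64, each host that routes the reply (first 192.168.26.102, then 192.168.26.100) decrements it once, and the veth pairs do not touch it. The arithmetic, with a hypothetical helper name:

```python
LINUX_DEFAULT_TTL = 64  # assumption: the replying container's kernel starts at TTL 64


def routed_hops(observed_ttl: int, initial_ttl: int = LINUX_DEFAULT_TTL) -> int:
    """Every router that forwards a packet decrements its TTL by exactly one."""
    return initial_ttl - observed_ttl


hops = routed_hops(62)  # ttl=62 in the ping replies above
print(f"{hops} routing hops between the two containers")
```

Two hops means exactly two routers on the path, the two hosts themselves, with no extra gateway or NAT device in between.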

Likewise, in a K8s cluster every container gets its own cali* interface on the host:

```
┌──[root@vms81.liruilongs.github.io]-[~/ansible]
└─$ip a
1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN qlen 1
    link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
    inet 127.0.0.1/8 scope host lo
       valid_lft forever preferred_lft forever
    inet6 ::1/128 scope host
       valid_lft forever preferred_lft forever
2: ens32: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000
    link/ether 00:0c:29:ad:e3:93 brd ff:ff:ff:ff:ff:ff
    inet 192.168.26.81/24 brd 192.168.26.255 scope global ens32
       valid_lft forever preferred_lft forever
    inet6 fe80::20c:29ff:fead:e393/64 scope link
       valid_lft forever preferred_lft forever
3: docker0: <NO-CARRIER,BROADCAST,MULTICAST,UP> mtu 1500 qdisc noqueue state DOWN
    link/ether 02:42:0a:9e:7d:44 brd ff:ff:ff:ff:ff:ff
    inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0
       valid_lft forever preferred_lft forever
4: tunl0@NONE: <NOARP,UP,LOWER_UP> mtu 1480 qdisc noqueue state UNKNOWN qlen 1
    link/ipip 0.0.0.0 brd 0.0.0.0
    inet 10.244.88.64/32 scope global tunl0
       valid_lft forever preferred_lft forever
5: cali12cf25006b5@if4: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1480 qdisc noqueue state UP
    link/ether ee:ee:ee:ee:ee:ee brd ff:ff:ff:ff:ff:ff link-netnsid 0
    inet6 fe80::ecee:eeff:feee:eeee/64 scope link
       valid_lft forever preferred_lft forever
6: cali5a282a7bbb0@if4: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1480 qdisc noqueue state UP
    link/ether ee:ee:ee:ee:ee:ee brd ff:ff:ff:ff:ff:ff link-netnsid 1
    inet6 fe80::ecee:eeff:feee:eeee/64 scope link
       valid_lft forever preferred_lft forever
7: calicb34164ec79@if4: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1480 qdisc noqueue state UP
    link/ether ee:ee:ee:ee:ee:ee brd ff:ff:ff:ff:ff:ff link-netnsid 2
    inet6 fe80::ecee:eeff:feee:eeee/64 scope link
       valid_lft forever preferred_lft forever
┌──[root@vms81.liruilongs.github.io]-[~/ansible]
└─$
```

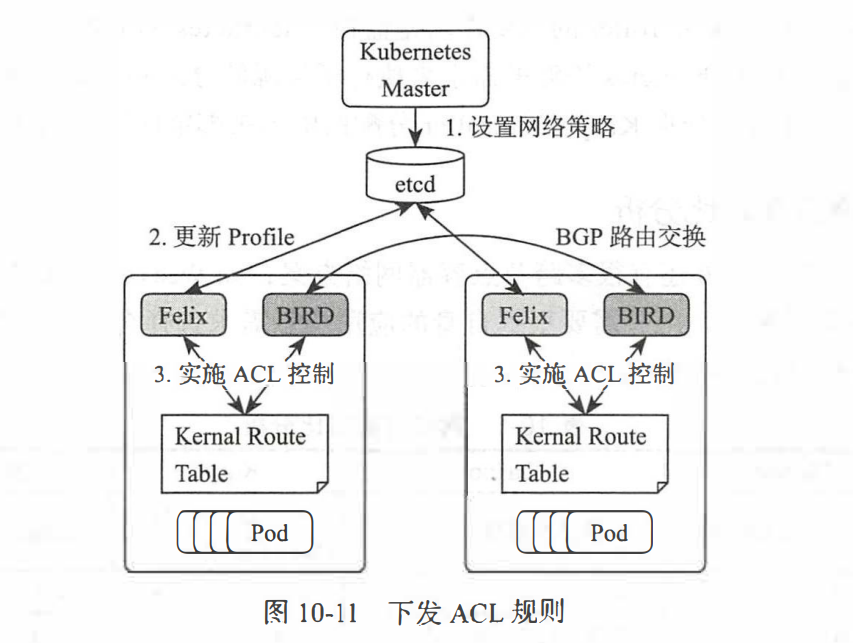

Kubernetes network policies

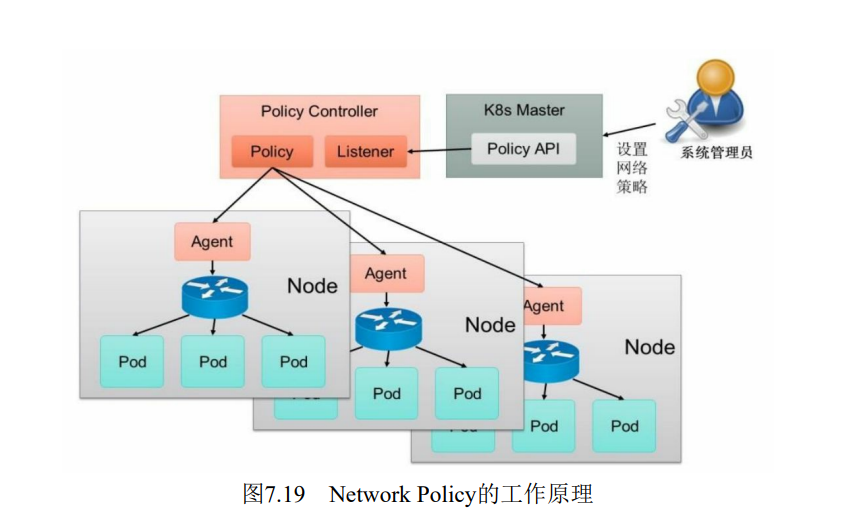

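To provide fine-grained network access isolation between containers (in effect, a firewall), Kubernetes introduced the Network Policy mechanism in version 1.3, developed under the lead of the SIG-Network group. It has since been promoted to the stable networking.k8s.io/v1 API.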

The main job of Network Policy is to restrict and admit network traffic between Pods.

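It works by using Pod labels as selectors to define the list of client Pods that are allowed or denied access. Selectors can apply at the Pod level and at the Namespace level.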

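To support Network Policy, Kubernetes introduced a new resource object, NetworkPolicy, with which users define network access policies between Pods. Defining a policy alone does not isolate anything, however: a policy controller is also required to enforce it.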

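The policy controller is provided by a third-party network component; open-source projects such as Calico, Cilium, Kube-router, Romana, and Weave Net all implement network policies. The figure shows how Network Policy works.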

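The policy controller implements an API listener that watches the NetworkPolicy objects users define, and applies the access rules through the agent on each Node (the agent is implemented by the CNI network plugin).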

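Network policy configuration

Network policies restrict network access to their target Pods. By default every Pod accepts all traffic; access to a Pod is restricted only once a NetworkPolicy that selects it has been created. Note that network policies are Pod-based.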

NetworkPolicy is namespaced: a policy applies only within its own namespace. It has two kinds of rules:

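- ingress: an allowlist of inbound rules, defining who may access the target Pods
- egress: an allowlist of outbound rules, defining what the target Pods may access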

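A rule can restrict peers in three ways: ipBlock, namespaceSelector, and podSelector. Note that separate entries of the from (or to) array are combined with OR. To combine namespaceSelector and podSelector with AND, put both selectors in the same array entry rather than starting each with its own "-".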

Here is a sample resource file:

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: test-network-policy
  namespace: default
spec:
  podSelector:
    matchLabels:
      role: db
  policyTypes:
  - Ingress
  - Egress
  ingress:
  - from:
    - ipBlock:
        cidr: 172.17.0.0/16
        except:
        - 172.17.1.0/24
    - namespaceSelector:
        matchLabels:
          project: myproject
    - podSelector:
        matchLabels:
          role: frontend
    ports:
    - protocol: TCP
      port: 6379
  egress:
  - to:
    - ipBlock:
        cidr: 10.0.0.0/24
    ports:
    - protocol: TCP
      port: 5978
```
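The OR/AND distinction is easy to get wrong, so here is a simplified Python model of how an ingress rule matches a traffic source. It is written for illustration and is not taken from any policy controller: it only handles matchLabels (no matchExpressions, named ports, or endPort), flattens matchLabels into plain dicts, and the source fields (ip, namespace, ns_labels, pod_labels) and all function names are hypothetical. OR_RULE mirrors the ingress rule of test-network-policy above; AND_RULE is a hypothetical variant with both selectors in one entry.

```python
import ipaddress

POLICY_NAMESPACE = "default"  # namespace of test-network-policy


def ip_block_matches(ip: str, block: dict) -> bool:
    addr = ipaddress.ip_address(ip)
    if addr not in ipaddress.ip_network(block["cidr"]):
        return False
    return not any(addr in ipaddress.ip_network(e) for e in block.get("except", []))


def labels_match(match_labels: dict, labels: dict) -> bool:
    """matchLabels only: every key/value pair must be present on the object."""
    return all(labels.get(k) == v for k, v in match_labels.items())


def peer_matches(peer: dict, src: dict) -> bool:
    """Selectors inside ONE peer entry are ANDed."""
    if "ipBlock" in peer:
        return ip_block_matches(src["ip"], peer["ipBlock"])
    if "namespaceSelector" in peer:
        ns_ok = labels_match(peer["namespaceSelector"], src["ns_labels"])
    else:
        ns_ok = src["namespace"] == POLICY_NAMESPACE  # podSelector alone: same namespace
    pod_ok = labels_match(peer.get("podSelector", {}), src["pod_labels"])
    return ns_ok and pod_ok


def ingress_allowed(rule: dict, src: dict, protocol: str, port: int) -> bool:
    """Entries in 'from' are ORed; the result is then ANDed with 'ports'."""
    peers = rule.get("from", [])
    from_ok = not peers or any(peer_matches(p, src) for p in peers)
    ports = rule.get("ports", [])
    port_ok = not ports or any(p["protocol"] == protocol and p["port"] == port for p in ports)
    return from_ok and port_ok


OR_RULE = {
    "from": [
        {"ipBlock": {"cidr": "172.17.0.0/16", "except": ["172.17.1.0/24"]}},
        {"namespaceSelector": {"project": "myproject"}},
        {"podSelector": {"role": "frontend"}},
    ],
    "ports": [{"protocol": "TCP", "port": 6379}],
}
AND_RULE = {
    "from": [{"namespaceSelector": {"project": "myproject"},
              "podSelector": {"role": "frontend"}}],
    "ports": [{"protocol": "TCP", "port": 6379}],
}

frontend = {"ip": "10.244.1.10", "namespace": "default",
            "ns_labels": {}, "pod_labels": {"role": "frontend"}}
print(ingress_allowed(OR_RULE, frontend, "TCP", 6379))   # frontend pod, same namespace
print(ingress_allowed(AND_RULE, frontend, "TCP", 6379))  # its namespace lacks project=myproject
```

The same frontend Pod is admitted by the OR form but rejected by the AND form, because in the AND form its namespace must also carry project=myproject.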

Default network policies at the Namespace level

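At the Namespace level you can also define default, namespace-wide policies, which let administrators apply uniform network policy settings to an entire Namespace.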

Deny all inbound traffic by default

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: default-deny-ingress
spec:
  podSelector: {}
  policyTypes:
  - Ingress
```

Allow all inbound traffic by default

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-all-ingress
spec:
  podSelector: {}
  ingress:
  - {}
  policyTypes:
  - Ingress
```

Deny all outbound traffic by default

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: default-deny-egress
spec:
  podSelector: {}
  policyTypes:
  - Egress
```

Allow all outbound traffic by default

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-all-egress
spec:
  podSelector: {}
  egress:
  - {}
  policyTypes:
  - Egress
```

Deny all inbound and all outbound traffic by default

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: default-deny-all
spec:
  podSelector: {}
  policyTypes:
  - Ingress
  - Egress
```
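How these defaults combine follows from two rules: a Pod is isolated in a direction only if some policy selects it and lists that direction in policyTypes, and once isolated, traffic is allowed if any rule of any selecting policy matches, so policies add up as allowlists. A simplified Python model of just that logic, assuming policyTypes is always written out (as in the five manifests above) and selectors are flattened matchLabels dicts; the names selects, allowed, and the policy constants are hypothetical, and by default only the empty rule {} counts as matching:

```python
def selects(policy: dict, pod_labels: dict) -> bool:
    """podSelector as a flattened matchLabels dict; {} selects every pod."""
    return all(pod_labels.get(k) == v for k, v in policy["podSelector"].items())


def allowed(policies: list, pod_labels: dict, direction: str,
            rule_matches=lambda rule: rule == {}) -> bool:
    """direction is "Ingress" or "Egress". Only the empty rule {} (allow
    everything) is treated as matching unless rule_matches is overridden."""
    relevant = [p for p in policies
                if selects(p, pod_labels) and direction in p["policyTypes"]]
    if not relevant:
        return True  # no policy isolates this pod in this direction
    key = direction.lower()  # "ingress" or "egress"
    return any(rule_matches(r) for p in relevant for r in p.get(key, []))


DEFAULT_DENY_INGRESS = {"podSelector": {}, "policyTypes": ["Ingress"]}
ALLOW_ALL_INGRESS = {"podSelector": {}, "policyTypes": ["Ingress"], "ingress": [{}]}
DEFAULT_DENY_ALL = {"podSelector": {}, "policyTypes": ["Ingress", "Egress"]}

pod = {"run": "pod1"}
print(allowed([], pod, "Ingress"))                                         # no policy at all
print(allowed([DEFAULT_DENY_INGRESS], pod, "Ingress"))                     # isolated, no rules
print(allowed([DEFAULT_DENY_INGRESS], pod, "Egress"))                      # egress untouched
print(allowed([DEFAULT_DENY_INGRESS, ALLOW_ALL_INGRESS], pod, "Ingress"))  # policies add up
```

This is why a default-deny policy can safely coexist with more specific allow policies: the deny policy only switches isolation on, and every other selecting policy can still open holes in it.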

Evolution of NetworkPolicy

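As a stable feature, SCTP support is enabled by default. To disable SCTP at the cluster level, you (or your cluster administrator) need to disable the SCTPSupport feature gate by passing --feature-gates=SCTPSupport=false,… to the API server. With the feature gate enabled, users can set the protocol field of a NetworkPolicy to SCTP (details differ slightly between versions).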

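NetworkPolicy in practice

Environment preparation

First we create two Services without any policy. Start with a working directory and a dedicated namespace, and make that namespace the default for the current context: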

```
┌──[root@vms81.liruilongs.github.io]-[~/ansible]
└─$d=k8s-network-create
┌──[root@vms81.liruilongs.github.io]-[~/ansible]
└─$mkdir $d;cd $d
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-network-create]
└─$kubectl create ns liruilong-network-create
namespace/liruilong-network-create created
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-network-create]
└─$kubectl config set-context $(kubectl config current-context) --namespace=liruilong-network-create
Context "kubernetes-admin@kubernetes" modified.
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-network-create]
└─$kubectl config view | grep namespace
    namespace: liruilong-network-create
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-network-create]
└─$
```

Next, create two pods to back the two Services:

```
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-network-create]
└─$kubectl run pod1 --image=nginx --image-pull-policy=IfNotPresent
pod/pod1 created
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-network-create]
└─$kubectl run pod2 --image=nginx --image-pull-policy=IfNotPresent
pod/pod2 created
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-network-create]
└─$kubectl get pods -o wide
NAME   READY   STATUS    RESTARTS   AGE   IP               NODE                         NOMINATED NODE   READINESS GATES
pod1   1/1     Running   0          35s   10.244.70.31     vms83.liruilongs.github.io   <none>           <none>
pod2   1/1     Running   0          21s   10.244.171.181   vms82.liruilongs.github.io   <none>           <none>
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-network-create]
└─$
```

Then change the home page of the Nginx container in each pod so the responses can be told apart.

```bash
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-network-create]
└─$kubectl get pods --show-labels
NAME   READY   STATUS    RESTARTS   AGE    LABELS
pod1   1/1     Running   0          100s   run=pod1
pod2   1/1     Running   0          86s    run=pod2
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-network-create]
└─$kubectl exec -it pod1 -- sh -c "echo pod1 >/usr/share/nginx/html/index.html"
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-network-create]
└─$kubectl exec -it pod2 -- sh -c "echo pod2 >/usr/share/nginx/html/index.html"
```

Create the two Services

```bash
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-network-create]
└─$kubectl expose --name=svc1 pod pod1 --port=80 --type=LoadBalancer
service/svc1 exposed
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-network-create]
└─$kubectl expose --name=svc2 pod pod2 --port=80 --type=LoadBalancer
service/svc2 exposed
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-network-create]
└─$kubectl get svc
NAME   TYPE           CLUSTER-IP       EXTERNAL-IP      PORT(S)        AGE
svc1   LoadBalancer   10.106.61.84     192.168.26.240   80:30735/TCP   14s
svc2   LoadBalancer   10.111.123.194   192.168.26.241   80:31034/TCP   5s
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-network-create]
└─$
```
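kubectl expose copies the pod's labels into the Service selector, so svc1 could also be written declaratively as below. This is a sketch; the external IP 192.168.26.240 shown above is handed out by whatever load-balancer implementation the cluster runs, which the post does not name.

```yaml
# Approximate declarative equivalent of:
#   kubectl expose --name=svc1 pod pod1 --port=80 --type=LoadBalancer
apiVersion: v1
kind: Service
metadata:
  name: svc1
  namespace: liruilong-network-create
spec:
  type: LoadBalancer                  # external IP comes from the cluster's LB implementation
  selector:
    run: pod1                         # copied from pod1's labels
  ports:
  - protocol: TCP
    port: 80                          # Service port
    targetPort: 80                    # container port (defaults to port)
```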

Access test: with no policy in place, both Services can be reached from the current namespace and from another namespace.

```bash
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-network-create]
└─$kubectl run testpod1 -it --rm --image=yauritux/busybox-curl --image-pull-policy=IfNotPresent
If you don't see a command prompt, try pressing enter.
/home
pod1
/home
pod2
/home
```

From another namespace (default)

```bash
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-network-create]
└─$kubectl run testpod2 -it --rm --image=yauritux/busybox-curl --image-pull-policy=IfNotPresent -n default
If you don't see a command prompt, try pressing enter.
/home # curl svc1.liruilong-network-create
pod1
/home # curl svc2.liruilong-network-create
pod2
/home #
```

Because the Services are of type LoadBalancer, the physical machine outside the cluster can reach them as well.

```powershell
PS E:\docker> curl 192.168.26.240

StatusCode        : 200
StatusDescription : OK
Content           : pod1

RawContent        : HTTP/1.1 200 OK
                    Connection: keep-alive
                    Accept-Ranges: bytes
                    Content-Length: 5
                    Content-Type: text/html
                    Date: Mon, 03 Jan 2022 12:29:32 GMT
                    ETag: "61d27744-5"
                    Last-Modified: Mon, 03 Jan 2022 04:1...
Forms             : {}
Headers           : {[Connection, keep-alive], [Accept-Ranges, bytes], [Content-Length, 5], [Content-Type, text/html]...}
Images            : {}
InputFields       : {}
Links             : {}
ParsedHtml        : System.__ComObject
RawContentLength  : 5
```

```powershell
PS E:\docker> curl 192.168.26.241

StatusCode        : 200
StatusDescription : OK
Content           : pod2

RawContent        : HTTP/1.1 200 OK
                    Connection: keep-alive
                    Accept-Ranges: bytes
                    Content-Length: 5
                    Content-Type: text/html
                    Date: Mon, 03 Jan 2022 12:29:49 GMT
                    ETag: "61d27752-5"
                    Last-Modified: Mon, 03 Jan 2022 04:1...
Forms             : {}
Headers           : {[Connection, keep-alive], [Accept-Ranges, bytes], [Content-Length, 5], [Content-Type, text/html]...}
Images            : {}
InputFields       : {}
Links             : {}
ParsedHtml        : System.__ComObject
RawContentLength  : 5

PS E:\docker>
```

Ingress Policies

Now let's look at ingress policies. First, the current pods and Services:

```bash
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-network-create]
└─$kubectl get pods --show-labels
NAME   READY   STATUS    RESTARTS        AGE    LABELS
pod1   1/1     Running   2 (3d12h ago)   5d9h   run=pod1
pod2   1/1     Running   2 (3d12h ago)   5d9h   run=pod2
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-network-create]
└─$kubectl get svc -o wide
NAME   TYPE           CLUSTER-IP       EXTERNAL-IP      PORT(S)        AGE    SELECTOR
svc1   LoadBalancer   10.106.61.84     192.168.26.240   80:30735/TCP   5d9h   run=pod1
svc2   LoadBalancer   10.111.123.194   192.168.26.241   80:31034/TCP   5d9h   run=pod2
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-network-create]
└─$
```

IP address of the external physical machine used for testing

```powershell
PS E:\docker> ipconfig

Windows IP Configuration
..........
Ethernet adapter VMware Network Adapter VMnet8:

   Connection-specific DNS Suffix  . :
   Link-local IPv6 Address . . . . . : fe80::f9c8:e941:4deb:698f%24
   IPv4 Address. . . . . . . . . . . : 192.168.26.1
   Subnet Mask . . . . . . . . . . . : 255.255.255.0
   Default Gateway . . . . . . . . . :
```

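IP Restrictions

We demonstrate the network policy by changing the allowed IP range and accessing the Service from the physical machine that hosts the cluster VMs. Clients inside the allowed CIDR can connect; clients outside it cannot.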

```bash
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-network-create]
└─$vim networkpolicy.yaml
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-network-create]
└─$kubectl apply -f networkpolicy.yaml
networkpolicy.networking.k8s.io/test-network-policy configured
```

Write the manifest so that only machines in the 172.17.0.0/16 range may connect to pod1:

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: test-network-policy
  namespace: liruilong-network-create
spec:
  podSelector:
    matchLabels:
      run: pod1
  policyTypes:
  - Ingress
  ingress:
  - from:
    - ipBlock:
        cidr: 172.17.0.0/16
    ports:
    - protocol: TCP
      port: 80
```

The machine outside the cluster (192.168.26.1) can no longer connect:

```powershell
PS E:\docker> curl 192.168.26.240
curl : Unable to connect to the remote server
At line:1 char:1
+ curl 192.168.26.240
+ ~~~~~~~~~~~~~~~~~~~
    + CategoryInfo          : InvalidOperation: (System.Net.HttpWebRequest:HttpWebRequest) [Invoke-WebRequest], WebException
    + FullyQualifiedErrorId : WebCmdletWebResponseException,Microsoft.PowerShell.Commands.InvokeWebRequestCommand
```

Now allow IPs from the test machine's own network segment (192.168.26.0/24):

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: test-network-policy
  namespace: liruilong-network-create
spec:
  podSelector:
    matchLabels:
      run: pod1
  policyTypes:
  - Ingress
  ingress:
  - from:
    - ipBlock:
        cidr: 192.168.26.0/24
    ports:
    - protocol: TCP
      port: 80
```

Change the CIDR in the manifest and reapply it:

```bash
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-network-create]
└─$sed -i 's#172.17.0.0/16#192.168.26.0/24#' networkpolicy.yaml
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-network-create]
└─$kubectl apply -f networkpolicy.yaml
```

Test: the external machine can connect again.

```powershell
PS E:\docker> curl 192.168.26.240

StatusCode        : 200
StatusDescription : OK
Content           : pod1

RawContent        : HTTP/1.1 200 OK
                    Connection: keep-alive
                    Accept-Ranges: bytes
                    Content-Length: 5
                    Content-Type: text/html
                    Date: Sat, 08 Jan 2022 14:59:13 GMT
                    ETag: "61d9a663-5"
                    Last-Modified: Sat, 08 Jan 2022 14:5...
Forms             : {}
Headers           : {[Connection, keep-alive], [Accept-Ranges, bytes], [Content-Length, 5], [Content-Type, text/html]...}
Images            : {}
InputFields       : {}
Links             : {}
ParsedHtml        : System.__ComObject
RawContentLength  : 5
```
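ipBlock can also carve exceptions out of an allowed range with except. The sketch below keeps the 192.168.26.0/24 rule but excludes a single address; 192.168.26.1/32 (the test machine from the ipconfig output) is used purely for illustration, so applying this version would lock that machine out again while the rest of the /24 stays allowed.

```yaml
# Sketch: allow 192.168.26.0/24 except one host (illustrative choice).
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: test-network-policy
  namespace: liruilong-network-create
spec:
  podSelector:
    matchLabels:
      run: pod1
  policyTypes:
  - Ingress
  ingress:
  - from:
    - ipBlock:
        cidr: 192.168.26.0/24
        except:
        - 192.168.26.1/32             # must lie inside the cidr above
    ports:
    - protocol: TCP
      port: 80
```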

Namespace Restrictions

Allow only traffic that comes from the default namespace.

```bash
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-network-create]
└─$kubectl get ns --show-labels | grep default
default           Active   26d   kubernetes.io/metadata.name=default
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-network-create]
└─$vim networkpolicy-name.yaml
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-network-create]
└─$kubectl apply -f networkpolicy-name.yaml
networkpolicy.networking.k8s.io/test-network-policy configured
```

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: test-network-policy
  namespace: liruilong-network-create
spec:
  podSelector:
    matchLabels:
      run: pod1
  policyTypes:
  - Ingress
  ingress:
  - from:
    - namespaceSelector:
        matchLabels:
          kubernetes.io/metadata.name: default
    ports:
    - protocol: TCP
      port: 80
```

The physical machine hosting the cluster can no longer connect:

```powershell
PS E:\docker> curl 192.168.26.240
curl : Unable to connect to the remote server
At line:1 char:1
+ curl 192.168.26.240
+ ~~~~~~~~~~~~~~~~~~~
    + CategoryInfo          : InvalidOperation: (System.Net.HttpWebRequest:HttpWebRequest) [Invoke-WebRequest], WebException
    + FullyQualifiedErrorId : WebCmdletWebResponseException,Microsoft.PowerShell.Commands.InvokeWebRequestCommand

PS E:\docker>
```

Pods in the current namespace cannot connect either:

```bash
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-network-create]
└─$kubectl run testpod1 -it --rm --image=yauritux/busybox-curl --image-pull-policy=IfNotPresent
/home
curl: (28) Connection timed out after 10413 milliseconds
```

Pods in the default namespace can connect:

```bash
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-network-create]
└─$kubectl run testpod1 -it --rm --image=yauritux/busybox-curl --image-pull-policy=IfNotPresent --namespace=default
/home
pod1
/home
```
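To admit more than one namespace, the namespaceSelector can use matchExpressions against the same kubernetes.io/metadata.name label shown in the namespace listing above. This is a sketch; including kube-system alongside default is only an example.

```yaml
# Sketch: admit traffic from the default and kube-system namespaces.
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: test-network-policy
  namespace: liruilong-network-create
spec:
  podSelector:
    matchLabels:
      run: pod1
  policyTypes:
  - Ingress
  ingress:
  - from:
    - namespaceSelector:
        matchExpressions:
        - key: kubernetes.io/metadata.name
          operator: In
          values: ["default", "kube-system"]   # illustrative namespace list
    ports:
    - protocol: TCP
      port: 80
```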

Pod Selector Restrictions

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: test-network-policy
  namespace: liruilong-network-create
spec:
  podSelector:
    matchLabels:
      run: pod1
  policyTypes:
  - Ingress
  ingress:
  - from:
    - podSelector:
        matchLabels:
          run: testpod
    ports:
    - protocol: TCP
      port: 80
```

Apply this policy, which allows access to pod1 only from pods labeled run=testpod:

```bash
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-network-create]
└─$kubectl apply -f networkpolicy-pod.yaml
networkpolicy.networking.k8s.io/test-network-policy created
```

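Create two pods, both labeled with --labels=run=testpod, one in the default namespace and one in the current namespace. Only the pod in the current namespace can connect, because a podSelector on its own matches pods only in the policy's namespace.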

```bash
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-network-create]
└─$kubectl run testpod1 -it --rm --image=yauritux/busybox-curl --image-pull-policy=IfNotPresent --labels=run=testpod --namespace=default
/home
curl: (28) Connection timed out after 10697 milliseconds
```

```bash
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-network-create]
└─$kubectl run testpod1 -it --rm --image=yauritux/busybox-curl --image-pull-policy=IfNotPresent --labels=run=testpod
/home
pod1
/home
```

With the following configuration, pods labeled run=testpod in any namespace can connect:

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: test-network-policy
  namespace: liruilong-network-create
spec:
  podSelector:
    matchLabels:
      run: pod1
  policyTypes:
  - Ingress
  ingress:
  - from:
    - namespaceSelector: {}           # empty selector: every namespace
      podSelector:                    # ...AND the pod must carry run=testpod
        matchLabels:
          run: testpod
    ports:
    - protocol: TCP
      port: 80
```

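With the following configuration, pods labeled run=testpod in the default namespace and in the current namespace can connect: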

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: test-network-policy
  namespace: liruilong-network-create
spec:
  podSelector:
    matchLabels:
      run: pod1
  policyTypes:
  - Ingress
  ingress:
  - from:
    - namespaceSelector:              # run=testpod pods in the default namespace
        matchLabels:
          kubernetes.io/metadata.name: default
      podSelector:
        matchLabels:
          run: testpod
    - podSelector:                    # run=testpod pods in the policy's own namespace
        matchLabels:
          run: testpod
    ports:
    - protocol: TCP
      port: 80
```
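Indentation changes the meaning of a from list: a namespaceSelector and a podSelector inside the same list element are ANDed, while selectors that each start their own element (with a leading dash) are ORed. The contrasting sketch below splits the two selectors into separate elements, which admits every pod in the default namespace, not just the run=testpod ones.

```yaml
# Contrast sketch: separate "from" elements are ORed.
# Admits ANY pod in "default", OR run=testpod pods in this namespace.
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: test-network-policy
  namespace: liruilong-network-create
spec:
  podSelector:
    matchLabels:
      run: pod1
  policyTypes:
  - Ingress
  ingress:
  - from:
    - namespaceSelector:              # element 1: all pods in "default"
        matchLabels:
          kubernetes.io/metadata.name: default
    - podSelector:                    # element 2: run=testpod in this namespace
        matchLabels:
          run: testpod
    ports:
    - protocol: TCP
      port: 80
```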

Finding the network policy applied to a pod

```bash
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-network-create]
└─$kubectl get networkpolicies
NAME                  POD-SELECTOR   AGE
test-network-policy   run=pod1       13m
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-network-create]
└─$kubectl get pods --show-labels
NAME   READY   STATUS    RESTARTS        AGE     LABELS
pod1   1/1     Running   2 (3d15h ago)   5d12h   run=pod1
pod2   1/1     Running   2 (3d15h ago)   5d12h   run=pod2
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-network-create]
└─$kubectl get networkpolicies | grep run=pod1
test-network-policy   run=pod1       15m
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-network-create]
└─$
```

Egress Policies

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: test-network-policy
spec:
  podSelector:
    matchLabels:
      run: pod1
  policyTypes:
  - Egress
  egress:
  - to:
    - podSelector:
        matchLabels:
          run: pod2
    ports:
    - protocol: TCP
      port: 80
```

With this policy, pod1 can reach only TCP port 80 on pod2.

```bash
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-network-create]
└─$kubectl apply -f networkpolicy1.yaml
```

Accessing pod2 by IP works normally:

```bash
┌──[root@vms81.liruilongs.github.io]-[~]
└─$kubectl exec -it pod1 -- bash
root@pod1:/
pod2
```

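Accessing pod2 by domain name fails, however. The DNS pods run in another namespace (kube-system), and pod1 is only allowed to reach pod2, so name resolution cannot happen. We need to add a rule that allows traffic to the DNS pods in that namespace.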

```bash
┌──[root@vms81.liruilongs.github.io]-[~]
└─$kubectl exec -it pod1 -- bash
root@pod1:/
^C
```

Look up the labels needed for the rule:

```bash
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-network-create]
└─$kubectl get ns --show-labels | grep kube-system
kube-system       Active   27d   kubernetes.io/metadata.name=kube-system
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-network-create]
└─$kubectl get pods --show-labels -n kube-system | grep dns
coredns-7f6cbbb7b8-ncd2s   1/1   Running   13 (3d19h ago)   24d   k8s-app=kube-dns,pod-template-hash=7f6cbbb7b8
coredns-7f6cbbb7b8-pjnct   1/1   Running   13 (3d19h ago)   24d   k8s-app=kube-dns,pod-template-hash=7f6cbbb7b8
```

Configure two egress rules, one to pod2 and one to kube-dns, each with its own protocol and port:

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: test-network-policy
spec:
  podSelector:
    matchLabels:
      run: pod1
  policyTypes:
  - Egress
  egress:
  - to:
    - podSelector:
        matchLabels:
          run: pod2
    ports:
    - protocol: TCP
      port: 80
  - to:
    - namespaceSelector:
        matchLabels:
          kubernetes.io/metadata.name: kube-system
      podSelector:
        matchLabels:
          k8s-app: kube-dns
    ports:
    - protocol: UDP
      port: 53
```
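DNS normally uses UDP port 53, but resolvers retry over TCP port 53 when a response is truncated, so a slightly more defensive version of the kube-dns rule allows both protocols. This is a hedged variant that the post did not test; the selectors are the labels looked up above.

```yaml
# Sketch: same egress policy, with TCP/53 added as a DNS fallback.
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: test-network-policy
spec:
  podSelector:
    matchLabels:
      run: pod1
  policyTypes:
  - Egress
  egress:
  - to:                               # rule 1: HTTP to pod2
    - podSelector:
        matchLabels:
          run: pod2
    ports:
    - protocol: TCP
      port: 80
  - to:                               # rule 2: DNS to CoreDNS in kube-system
    - namespaceSelector:
        matchLabels:
          kubernetes.io/metadata.name: kube-system
      podSelector:
        matchLabels:
          k8s-app: kube-dns
    ports:
    - protocol: UDP
      port: 53
    - protocol: TCP                   # fallback for truncated responses
      port: 53
```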

Test: pod1 can now reach pod2 by domain name.

```bash
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-network-create]
└─$vim networkpolicy2.yaml
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-network-create]
└─$kubectl apply -f networkpolicy2.yaml
networkpolicy.networking.k8s.io/test-network-policy configured
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-network-create]
└─$kubectl get networkpolicies
NAME                  POD-SELECTOR   AGE
test-network-policy   run=pod1       3h38m
┌──[root@vms81.liruilongs.github.io]-[~/ansible/k8s-network-create]
└─$kubectl exec -it pod1 -- bash
root@pod1:/
pod2
root@pod1:/
```

Time is limited, so that's all I'll share about network policies for now. Keep going, everyone!